At the dawn of 2020, in case anyone in the stat/ML community is not yet aware of Francis Bach's blog, started last year: it is a great place to learn about general tricks in machine learning, explained in simple words. This month's post, "The sum of a geometric series is all you need!", shows how ubiquitous geometric series are in stochastic gradient descent, among other topics. In this post, I describe yet another situation where the sum of a geometric series can be useful in statistics.

Turning a sum of expectations into a single expectation

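I also found the sum of a geometric series useful for turning a sum of expectations into a single expectation, by linearity of expectation. More specifically, for a random variable $X$ supported on $[0,1]$ with $X<1$ almost surely,

$$\sum_{k\geq 0}\mathbb{E}[X^k] = \mathbb{E}\Big[\sum_{k\geq 0}X^k\Big] = \mathbb{E}\Big[\frac{1}{1-X}\Big],$$

where the sum can be infinite (exchanging the infinite sum and the expectation is legitimate here because all the terms are nonnegative). Let us specify this with Beta variables: let $X\sim\mathrm{Beta}(a,b)$ with positive parameters $a,b>0$. Then the $k$-th moment of $X$ (for an integer $k\geq 0$) is

$$\mathbb{E}[X^k] = \frac{(a)_k}{(a+b)_k},$$

where $(x)_k$ denotes the Pochhammer symbol, or rising factorial, $(x)_k = x(x+1)\cdots(x+k-1)$, with the convention $(x)_0 = 1$.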
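As a quick sanity check of the moment formula, here is a small Python sketch comparing the Pochhammer ratio with the equivalent expression $B(a+k,b)/B(a,b)$ (a standard identity, since $\Gamma(a+k)/\Gamma(a) = (a)_k$). The helper names `pochhammer`, `log_beta`, `beta_moment` and `beta_moment_via_beta_fn` are my own illustrative choices, and the parameter values are arbitrary.

```python
import math


def pochhammer(x: float, k: int) -> float:
    """Rising factorial (x)_k = x (x+1) ... (x+k-1), with (x)_0 = 1."""
    result = 1.0
    for i in range(k):
        result *= x + i
    return result


def log_beta(a: float, b: float) -> float:
    """log B(a, b), using B(a, b) = Gamma(a) Gamma(b) / Gamma(a + b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_moment(a: float, b: float, k: int) -> float:
    """E[X^k] for X ~ Beta(a, b), as the Pochhammer ratio (a)_k / (a+b)_k."""
    return pochhammer(a, k) / pochhammer(a + b, k)


def beta_moment_via_beta_fn(a: float, b: float, k: int) -> float:
    """The same moment written as B(a + k, b) / B(a, b)."""
    return math.exp(log_beta(a + k, b) - log_beta(a, b))


# Check that both expressions agree on a few arbitrary parameter values.
for a, b in [(0.5, 0.5), (2.0, 3.0), (1.0, 7.5)]:
    for k in range(6):
        assert math.isclose(beta_moment(a, b, k),
                            beta_moment_via_beta_fn(a, b, k), rel_tol=1e-10)

print(beta_moment(2.0, 3.0, 2))  # (2*3) / (5*6) = 0.2
```

Working on the log scale with `math.lgamma` in the second version avoids overflowing the gamma function for large arguments.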

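On the one hand, the sum $\sum_{k\geq 0}\frac{(a)_k}{(a+b)_k}$ doesn't look like an easy-going guy; on the other hand, turning it into the single expectation $\mathbb{E}\big[\frac{1}{1-X}\big]$ reveals a more amenable expression, since we can use the result

$$\mathbb{E}\big[X^{c}(1-X)^{d}\big] = \frac{B(a+c,\,b+d)}{B(a,b)}$$

for any $c>-a$ and $d>-b$, where $B(a,b)=\frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)}$ denotes the beta function. Taking $c=0$ and $d=-1$, we conclude that the required series has a finite limit if and only if $b>1$, in which case

$$\sum_{k\geq 0}\frac{(a)_k}{(a+b)_k} = \mathbb{E}\Big[\frac{1}{1-X}\Big] = \frac{B(a,b-1)}{B(a,b)} = \frac{\Gamma(b-1)\,\Gamma(a+b)}{\Gamma(b)\,\Gamma(a+b-1)} = \frac{a+b-1}{b-1},$$

which wasn't trivial to me a priori.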
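To see the identity at work numerically, here is a rough Python sketch that compares three things for illustrative values $a=2$, $b=3$ (for which the limit is $(a+b-1)/(b-1)=2$): a truncated version of the series, the closed form, and a Monte Carlo estimate of the single expectation $\mathbb{E}[1/(1-X)]$ using Python's `random.betavariate`. The helper names `partial_sum` and `closed_form`, the truncation level, the sample size and the seed are all my own assumptions. The last line illustrates divergence when $b\leq 1$: with $a=b=1$ the terms are $1/(k+1)$, so the partial sums are harmonic numbers.

```python
import math
import random


def partial_sum(a: float, b: float, n_terms: int) -> float:
    """Sum of the first n_terms terms of sum_{k>=0} (a)_k / (a+b)_k.

    Consecutive terms satisfy t_{k+1} = t_k * (a + k) / (a + b + k), with t_0 = 1.
    """
    term, total = 1.0, 0.0
    for k in range(n_terms):
        total += term
        term *= (a + k) / (a + b + k)
    return total


def closed_form(a: float, b: float) -> float:
    """The limit (a + b - 1) / (b - 1) = B(a, b - 1) / B(a, b), valid for b > 1."""
    if b <= 1:
        raise ValueError("the series diverges when b <= 1")
    return (a + b - 1) / (b - 1)


a, b = 2.0, 3.0  # arbitrary illustrative values with b > 1
rng = random.Random(0)  # fixed seed for reproducibility
n_samples = 100_000
# Monte Carlo estimate of the single expectation E[1 / (1 - X)], X ~ Beta(a, b).
mc_estimate = sum(1.0 / (1.0 - rng.betavariate(a, b)) for _ in range(n_samples)) / n_samples

print(partial_sum(a, b, 100_000), closed_form(a, b), mc_estimate)

# For b <= 1 the partial sums keep growing: with a = b = 1 they are harmonic numbers.
print([round(partial_sum(1.0, 1.0, n), 3) for n in (10, 100, 1000)])
```

Computing each term from the previous one, rather than evaluating two Pochhammer symbols from scratch, keeps the truncated sum linear in the number of terms and avoids overflow.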

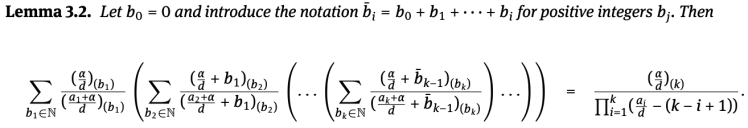

Where I’ve used this trick

In a joint work with Caroline Lawless, who was an intern at Inria Grenoble Rhône-Alpes in 2018 (now a Ph.D. candidate between Oxford and Paris-Dauphine), we proposed a simple proof of Pitman-Yor's Chinese restaurant process from its stick-breaking representation, thus generalizing a recent proof for the Dirichlet process by Jeff Miller. One of the technical lemmas there is the one shown in the picture above, which we proved by (backward) induction using the identity obtained in the previous section.

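By the way, stick-breaking defines a distribution over the infinite simplex, i.e. a specific way to randomly break a stick of unit length into infinitely many pieces, $(p_1, p_2, \dots)$, with positive $p_j$s, defined by

$$p_1 = V_1 \quad\text{and}\quad p_j = V_j\prod_{l=1}^{j-1}(1-V_l)$$

for $j\geq 2$, where the $V_j$s are iid beta random variables. This sounds like a stochastic version of Zeno's paradox mentioned by Francis: taking all $V_j = 1/2$ deterministically gives $p_j = 2^{-j}$, the successive halvings of Zeno.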
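For readers who like to see the stick being broken, here is a minimal Python sketch of the construction above, truncated to finitely many pieces. The function name `stick_breaking` is my own, and the choice of iid $\mathrm{Beta}(1,\theta)$ fractions (the Dirichlet process case) with $\theta=2$ and 200 pieces is only an illustrative assumption. The deterministic case $V_j=1/2$ reproduces Zeno's halvings.

```python
import random
from typing import Iterable, List


def stick_breaking(fractions: Iterable[float]) -> List[float]:
    """Turn break fractions V_1, V_2, ... into weights p_1, p_2, ...

    p_1 = V_1 and p_j = V_j * prod_{l<j} (1 - V_l): each step breaks off a
    fraction V_j of the piece of stick that is still left.
    """
    weights = []
    remaining = 1.0  # current value of prod_{l<j} (1 - V_l)
    for v in fractions:
        weights.append(v * remaining)
        remaining *= 1.0 - v
    return weights


# Deterministic fractions V_j = 1/2: Zeno-like halving, p_j = 2^{-j}.
print(stick_breaking([0.5] * 5))  # [0.5, 0.25, 0.125, 0.0625, 0.03125]

# iid Beta(1, theta) fractions (Dirichlet process case), truncated at 200 pieces.
theta = 2.0  # arbitrary illustrative concentration parameter
rng = random.Random(1)
p = stick_breaking(rng.betavariate(1.0, theta) for _ in range(200))
print(sum(p))  # close to 1: the leftover stick shrinks very fast
```

Keeping a running product `remaining` means each new weight costs one multiplication, and the first $n$ weights always sum to $1-\prod_{l\leq n}(1-V_l)$.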

Thanks for reading, and happy 2020 to all!

Julyan